AI assistants are rapidly becoming essential to how businesses manage communication, especially in support and operations. From suggesting email replies to handling entire conversations, they allow teams to scale without increasing workload, and customers get faster, more accurate service in return.

But how exactly do AI assistants work behind the scenes? What powers their ability to understand, respond, and improve over time?

Let’s break it down.

What are AI Assistants?

AI assistants are software systems that process natural language, retrieve relevant information, and take action by generating content, automating tasks, or responding directly to users.

Unlike chatbots that follow rigid if-this-then-that rules, modern AI assistants are powered by language models, machine learning, and retrieval systems. They don’t just match keywords; they interpret context, prioritize urgency, and improve with use.

📊 According to research by Servion Global Solutions, 95% of customer interactions will be powered by AI by 2025, a clear sign that AI assistants are not a luxury but a core layer of support infrastructure.

👉 You can explore a full breakdown of what an AI assistant is, and how it differs from basic chatbots, in this Gmelius blog.

Key Technologies Powering AI Assistants

Building an AI assistant requires more than just an LLM (Large Language Model). Here are the critical components that make modern assistants smart, fast, and reliable:

- Natural Language Processing (NLP):

Breaks down sentences into their components (tokens, parts of speech) to understand what the user means, not just what they say.

- Large Language Models (LLMs):

These are the core engines (like OpenAI’s GPT-4 or Claude) that generate responses. They’ve been trained on billions of examples to understand grammar, intent, and style.

- Retrieval-Augmented Generation (RAG):

Rather than rely solely on training data, RAG fetches relevant information from a connected source (your help docs, for example) and feeds it to the LLM. This makes responses more accurate and context-aware.

- Intent classification & entity extraction:

Determines what the user wants to do (e.g., “cancel subscription”) and pulls any key info (e.g., account ID) to carry out the task.

- Knowledge graphs:

Structured databases that map relationships between things, like which customers qualify for what tier, or which policies apply to which regions. These help assistants reason through more complex scenarios.

- Sentiment & emotion analysis:

Gauges whether the user is frustrated, confused, or happy, which is essential for prioritizing or adapting tone.

- Reinforcement learning from human feedback (RLHF):

AI learns which responses are helpful based on upvotes, downvotes, or follow-up corrections. This closes the loop and continuously improves the assistant.

- Text-to-speech and speech-to-text:

Enables voice interactions in apps, phone support, or smart devices.

- Secure vector embedding stores:

Store knowledge base content as vectors (semantic meaning, not just words), enabling fast, private semantic search inside your own cloud environment.

- Tool & API orchestration:

The assistant may need to call APIs, trigger workflows, or pull data from third-party tools. Orchestration frameworks manage this interaction securely.

Step-by-Step: How an AI Assistant Responds to a Query

AI assistants may seem magical, but under the hood, they follow a clear technical pipeline. Here's how the process typically works:

1. Input collection

The assistant starts with user input, which can come from various channels: a typed email, a live chat message, a contact form, or voice through a call center interface. Each of these inputs is captured through APIs or integrated widgets and routed into the assistant’s processing pipeline. Input formats are standardized into a structured request for downstream tasks, often including session metadata (timestamp, channel, user ID) for contextual awareness.
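To make the idea of a standardized request concrete, here is a minimal Python sketch. The `AssistantRequest` shape, the `from_chat_message` helper, and the payload keys (`message`, `visitor_id`) are all hypothetical names chosen for illustration, not part of any particular product's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AssistantRequest:
    """Channel-agnostic request handed to the rest of the pipeline."""
    text: str
    channel: str  # e.g. "email", "chat", "form", "voice"
    user_id: str
    # Session metadata: when the request entered the pipeline (UTC).
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def from_chat_message(payload: dict) -> AssistantRequest:
    """Map a hypothetical chat-widget payload onto the shared request shape."""
    return AssistantRequest(
        text=payload["message"].strip(),
        channel="chat",
        user_id=payload["visitor_id"],
    )


request = from_chat_message({"message": "  Where is my order?  ", "visitor_id": "u-123"})
print(request.channel, request.text)
```

Each channel would get its own small adapter like `from_chat_message`, so every later step only ever sees one request type.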

2. Speech recognition (if the input includes voice)

When the user speaks instead of typing, the system engages an Automatic Speech Recognition (ASR) engine to convert audio signals into text. This process involves waveform analysis, phoneme detection, and language modeling. Modern ASR systems often use deep learning models (like Wav2Vec 2.0 or Whisper) trained on multilingual and domain-specific datasets to improve transcription accuracy in noisy environments or with non-native accents.

3. Preprocessing

Raw text is normalized to handle variations in spelling, tense, and formatting. This includes tokenization, lemmatization (reducing “running” to “run”), and expansion of abbreviations or slang (“tmrw” → “tomorrow”). Personally identifiable information (PII) like email addresses or account numbers is redacted or masked, both for privacy and to avoid skewing downstream model predictions. The result is a clean, semantically rich input ready for intent parsing.
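A rough sketch of this step, using only regular expressions from the Python standard library. The abbreviation table and the assumption that account numbers are runs of eight or more digits are illustrative; lemmatization is left out because it normally requires an NLP library.

```python
import re

# Hypothetical abbreviation table; a production system would use a larger one.
ABBREVIATIONS = {"tmrw": "tomorrow", "pls": "please", "acct": "account"}

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ACCOUNT_RE = re.compile(r"\b\d{8,}\b")  # assumption: account numbers are 8+ digits


def preprocess(text: str) -> str:
    # Mask PII first so it never reaches downstream models.
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = ACCOUNT_RE.sub("[ACCOUNT]", text)

    tokens = []
    for token in text.split():
        if token.startswith("["):
            # Leave mask placeholders such as [EMAIL] untouched.
            tokens.append(token)
            continue
        lowered = token.lower()
        # Expand known abbreviations; everything else is just lowercased.
        tokens.append(ABBREVIATIONS.get(lowered, lowered))
    return " ".join(tokens)


cleaned = preprocess("Pls call me tmrw about acct 12345678, email jane@example.com")
print(cleaned)
```

Masking happens before normalization on purpose: once an address or account number is replaced, no later step can accidentally log or learn from it.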

4. Intent detection

A trained intent classifier (typically built on transformer models like BERT or RoBERTa) maps user input to a predefined intent class. For example, “Can I check my invoice from last month?” may be tagged with the intent billing_view_invoice. Confidence thresholds are enforced to reduce ambiguity, and fallback responses (e.g., “Can you clarify your request?”) are triggered when uncertainty exceeds a set limit. Multilingual and multi-intent capabilities may also be supported depending on the assistant’s scope.
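The thresholding logic can be sketched without a real model. In the example below, a toy keyword scorer stands in for the transformer classifier; the intent names other than `billing_view_invoice`, the keyword sets, and the 0.6 threshold are assumptions made for illustration.

```python
import re
from typing import Dict, Set, Tuple

# Hypothetical keyword sets standing in for a trained transformer classifier.
INTENT_KEYWORDS: Dict[str, Set[str]] = {
    "billing_view_invoice": {"invoice", "bill", "receipt"},
    "reset_password": {"password", "reset", "login"},
}
CONFIDENCE_THRESHOLD = 0.6  # assumption: tune on labeled data
FALLBACK_INTENT = "fallback_clarify"


def score_intents(text: str) -> Dict[str, float]:
    """Toy scorer: each intent's share of all keyword hits in the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    hits = {intent: len(words & kws) for intent, kws in INTENT_KEYWORDS.items()}
    total = sum(hits.values())
    return {intent: (n / total if total else 0.0) for intent, n in hits.items()}


def detect_intent(text: str) -> Tuple[str, float]:
    """Return the best intent, or the fallback when confidence is too low."""
    scores = score_intents(text)
    intent, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence < CONFIDENCE_THRESHOLD:
        return FALLBACK_INTENT, confidence
    return intent, confidence


print(detect_intent("Can I check my invoice from last month?"))
print(detect_intent("invoice password"))  # evenly split, so it falls back
```

With a real classifier, `score_intents` would be replaced by the model's probability output; the threshold check around it stays the same.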

5. Entity extraction

Using Named Entity Recognition (NER), the assistant identifies structured data points, such as order numbers, product SKUs, date ranges, or locations. These entities are annotated and stored in a context object that persists through the conversation. Extraction models may be rule-based (regex) or ML-based, depending on the complexity of the entity types and domain specificity. These values are critical for API calls, form autofill, or contextual lookup.
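For the rule-based variant, a small sketch might look like the following. The identifier formats (`ORD-12345`, `SKU-AB12`, ISO dates) are invented for the example; real patterns depend on your own data.

```python
import re
from typing import Dict, List

# Hypothetical identifier formats; adjust the patterns to your own data.
ENTITY_PATTERNS = {
    "order_number": re.compile(r"\bORD-\d{5}\b"),
    "sku": re.compile(r"\bSKU-[A-Z0-9]{4,}\b"),
    "date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
}


def extract_entities(text: str) -> Dict[str, List[str]]:
    """Return every pattern match found in a single message."""
    found = {}
    for name, pattern in ENTITY_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[name] = matches
    return found


def update_context(context: dict, text: str) -> dict:
    """Merge newly found entities into the conversation context, skipping duplicates."""
    for name, values in extract_entities(text).items():
        existing = context.setdefault(name, [])
        for value in values:
            if value not in existing:
                existing.append(value)
    return context


context = {}
update_context(context, "Order ORD-12345 was placed on 2024-03-01")
update_context(context, "It is still ORD-12345, and the item is SKU-AB12")
print(context)
```

Because the context object is updated in place on every turn, a later step can read the order number even if the customer mentioned it several messages earlier.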

6. Context retrieval

With intent and entities in hand, the assistant queries relevant data sources, such as a vector database of knowledge base articles (using embeddings via OpenAI or Cohere), customer relationship management (CRM) records, or past conversation transcripts. Semantic similarity search retrieves conceptually related entries, not just keyword matches. The assistant builds a context stack to reference during decision-making and response generation.
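At its core, semantic similarity search ranks stored vectors by how close they are to the query vector. The sketch below uses cosine similarity over hand-written three-dimensional vectors; in practice the vectors would come from an embedding model and live in a vector database, and the document IDs here are made up.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float],
          index: Dict[str, Sequence[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    """Return the k documents whose vectors are most similar to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy vectors for illustration only; real embeddings have hundreds of dimensions.
INDEX = {
    "refund-policy": [0.9, 0.1, 0.0],
    "reset-password": [0.0, 0.2, 0.9],
    "invoice-download": [0.7, 0.6, 0.1],
}

results = top_k([0.8, 0.3, 0.0], INDEX, k=2)
print([doc_id for doc_id, _ in results])
```

A brute-force scan like this is fine for a handful of documents; dedicated vector stores exist because the same ranking has to stay fast across very large indexes.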

7. Decision-making

Based on the intent, entities, and retrieved context, the assistant chooses the next action. This could be a hard-coded rule (e.g., “if intent = reset_password → trigger workflow”), or a dynamic policy engine driven by reinforcement learning or decision trees. Advanced systems may incorporate AI planning algorithms to sequence multiple actions. If escalation is required (e.g., intent is sensitive or complex), the assistant routes the query to a human with pre-filled context.
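The simplest version of this step is a rule table with escalation checks in front of it. The sketch below assumes hypothetical intent names, workflow names, and a 0.6 confidence cutoff; a learned policy engine would replace the body of `decide` while keeping the same inputs and outputs.

```python
from dataclasses import dataclass

# Hypothetical intents and workflow names, for illustration only.
SENSITIVE_INTENTS = {"legal_complaint", "account_deletion"}
WORKFLOWS = {
    "reset_password": "send_reset_link",
    "billing_view_invoice": "fetch_invoice",
}
MIN_CONFIDENCE = 0.6  # assumption: below this, a human takes over


@dataclass
class Decision:
    action: str  # "run_workflow", "escalate", or "answer"
    target: str = ""
    reason: str = ""


def decide(intent: str, confidence: float) -> Decision:
    # Escalation rules are checked first so they always win.
    if intent in SENSITIVE_INTENTS:
        return Decision("escalate", target="human_agent", reason="sensitive intent")
    if confidence < MIN_CONFIDENCE:
        return Decision("escalate", target="human_agent", reason="low confidence")
    if intent in WORKFLOWS:
        return Decision("run_workflow", target=WORKFLOWS[intent])
    return Decision("answer", reason="no workflow mapped")


print(decide("reset_password", 0.92))
print(decide("legal_complaint", 0.99))
```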

8. Response generation

The assistant now crafts a response using Retrieval-Augmented Generation (RAG). This combines retrieved data (e.g., a help article or user profile) with a language model like GPT to generate personalized, natural language replies. For transactional intents, it may call APIs to fetch live data (e.g., order status) or initiate backend processes. Responses are formatted for tone, length, and clarity, often using few-shot prompting to stay aligned with company voice.
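As an illustration of how retrieved passages and few-shot examples come together, here is a sketch that only assembles the prompt text. The function name, the instruction wording, and the sample passages are assumptions; the call to the language model itself is deliberately left out.

```python
from typing import List, Tuple


def build_prompt(question: str,
                 passages: List[str],
                 examples: List[Tuple[str, str]]) -> str:
    """Assemble a few-shot, retrieval-grounded prompt as plain text."""
    parts = ["You are a support assistant. Answer using only the context below."]

    # Retrieved passages ground the answer in company content.
    context_lines = "\n".join(f"- {passage}" for passage in passages)
    parts.append(f"Context:\n{context_lines}")

    # Few-shot examples demonstrate the desired tone and length.
    for customer_msg, ideal_reply in examples:
        parts.append(f"Customer: {customer_msg}\nReply: {ideal_reply}")

    # The real question goes last, with the reply left open for the model.
    parts.append(f"Customer: {question}\nReply:")
    return "\n\n".join(parts)


prompt = build_prompt(
    question="Where is my invoice?",
    passages=["Invoices are listed under Billing > History."],
    examples=[("How do I log in?", "Head to the login page and enter your email.")],
)
print(prompt)
```

The resulting string is what would be sent to the model; swapping the examples is usually the cheapest way to adjust the assistant's voice.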

9. Guardrails

Before sending, the system performs several safety checks. These include policy filters (e.g., prevent sending credentials), tone analysis (ensure the assistant doesn’t sound rude or dismissive), and human-in-the-loop checkpoints for flagged categories (e.g., legal, compliance, VIP customers). Toxicity classifiers, PII detectors, and rate limiters help ensure responsible AI behavior. Logging occurs in parallel for compliance and auditing.
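A few of these checks can be sketched as a single function that returns a list of violations. The credential pattern, the phrase list used as a crude tone check, and the review categories are hypothetical stand-ins; production systems typically use trained toxicity and PII classifiers instead of simple patterns.

```python
import re
from typing import List

# Assumption: a credential leak looks like "password: value" or "api_key=value".
CREDENTIAL_RE = re.compile(
    r"\b(password|api[_ ]?key|secret)\b\s*[:=]\s*\S+", re.IGNORECASE
)
BLOCKED_PHRASES = ("calm down", "as i already said")  # hypothetical tone list
REVIEW_CATEGORIES = {"legal", "compliance", "vip"}


def check_reply(reply: str, category: str) -> List[str]:
    """Return guardrail violations; an empty list means the reply may be sent."""
    issues = []
    if CREDENTIAL_RE.search(reply):
        issues.append("contains_credentials")
    lowered = reply.lower()
    if any(phrase in lowered for phrase in BLOCKED_PHRASES):
        issues.append("dismissive_tone")
    if category in REVIEW_CATEGORIES:
        issues.append("needs_human_review")
    return issues


print(check_reply("Your password: hunter2", "billing"))
print(check_reply("You can reset your password from the login page.", "billing"))
```

Returning every violation, rather than stopping at the first, gives the reviewing agent the full picture of why a draft was held back.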

10. Delivery + Logging

Once approved, the assistant delivers the reply via the same channel it came from: email, chat, or voice. Simultaneously, it logs the session metadata, user satisfaction scores (via thumbs-up or CSAT prompts), and key metrics such as first response time (FRT) or resolution time. This data feeds back into the training pipeline, where supervised fine-tuning or RLHF (Reinforcement Learning from Human Feedback) improves future performance.

Over time, this loop improves. New articles are indexed, new patterns are learned, and feedback updates the model. The result? A smarter assistant that keeps up with your product, customers, and workflows.

Curious about the broader impact? Learn more about how AI assistants help businesses improve efficiency, reduce costs, and scale smarter.

Real-World Examples: AI Assistants in Gmail

With platforms like Gmelius, AI assistants are embedded directly inside Gmail. You don’t need a new app, just smarter email.

Gmelius AI assistants go beyond surface-level automation. They can securely connect to your internal documentation, help center, or knowledge base to deliver responses grounded in your company’s actual workflows and language.

Once connected, the assistant builds a secure, private vector index of your content, fully encrypted and never shared or used to train external models. Powered by Google’s Gemini AI and deeply embedded in Gmail, the assistant refreshes its understanding every week, ensuring it reflects your latest processes, policies, and team knowledge with precision and privacy.

Here are two practical examples:

Gmelius email reply assistant

This assistant drafts smart replies to incoming emails based on the conversation thread, saved templates, and company knowledge base. It understands tone, intent, and urgency, so human agents can simply review and send.

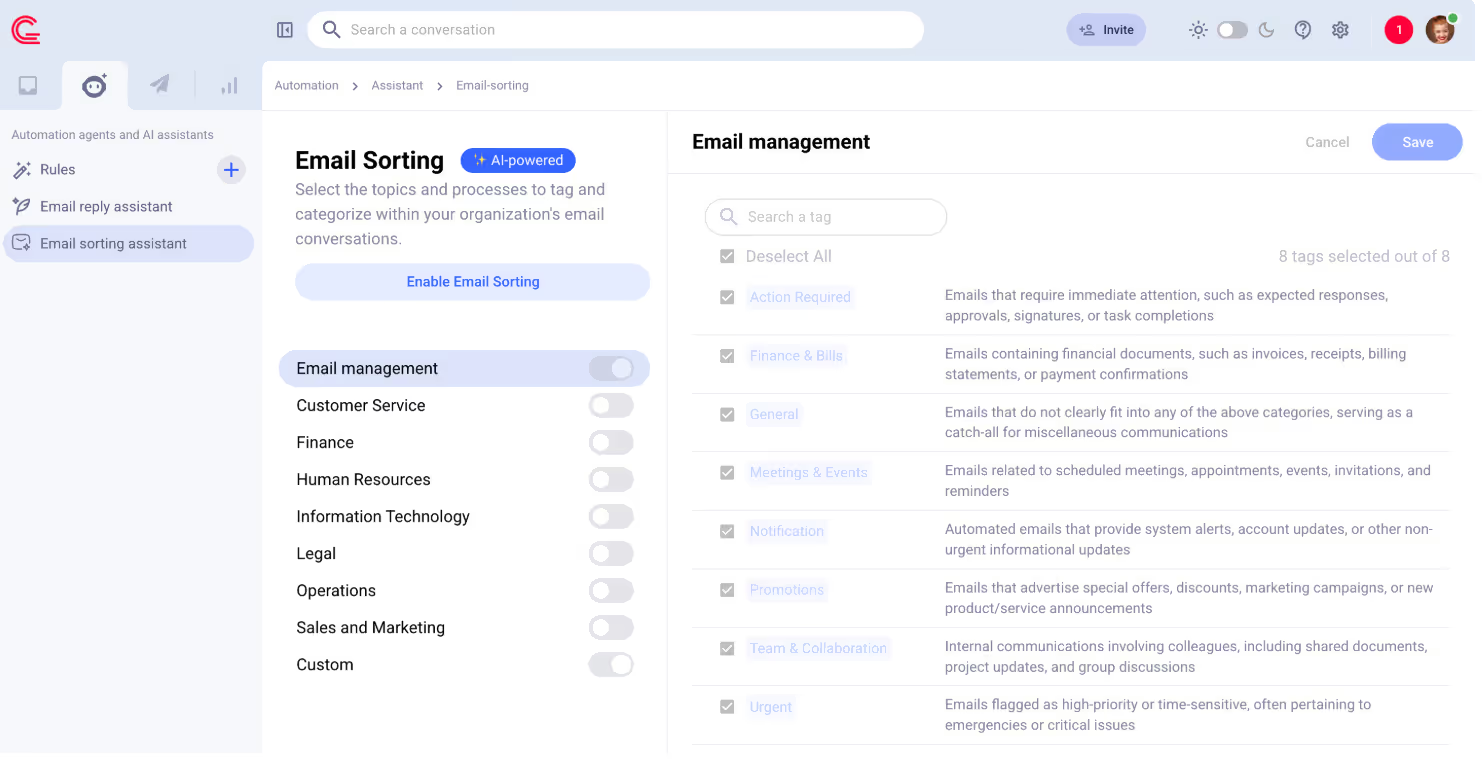

Gmelius email sorting assistant

This assistant automatically classifies emails (billing, support, feedback, etc.) and routes them to the right person or tag. Teams eliminate manual triage and reduce reply time across the board.

How to Make AI Assistants Work for You

If you’re thinking about adding an AI assistant to your support or operations workflow, keep these best practices in mind:

1. Start with repetition

Identify the 3–5 tasks you repeat most: “Where’s my order?”, “How do I reset my password?”, “Can I get a copy of my invoice?” These are easy wins for automation.

2. Use your own knowledge

Upload internal docs, product manuals, and FAQ content. This ensures your assistant gives answers in your language, using accurate info.

3. Keep humans in the loop

Always allow for agent approval on complex or sensitive replies, especially during the first phase. AI should assist, not replace, your team.

4. Track performance

Look at metrics like:

- First response time (FRT)

- Time to resolution (TTR)

- Deflection rate (how many queries were handled without agent involvement)
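If your helpdesk can export ticket timestamps, these three metrics are straightforward to compute. The sketch below assumes a hypothetical `Ticket` record with four fields; the field names and sample data are invented for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Dict, List


@dataclass
class Ticket:
    created: datetime
    first_response: datetime
    resolved: datetime
    handled_by_agent: bool  # False means the assistant resolved it alone


def summarize(tickets: List[Ticket]) -> Dict[str, float]:
    """Compute average FRT and TTR in seconds, plus the deflection rate."""
    frt = mean((t.first_response - t.created).total_seconds() for t in tickets)
    ttr = mean((t.resolved - t.created).total_seconds() for t in tickets)
    deflected = sum(1 for t in tickets if not t.handled_by_agent)
    return {
        "avg_frt_seconds": frt,
        "avg_ttr_seconds": ttr,
        "deflection_rate": deflected / len(tickets),
    }


start = datetime(2024, 1, 1, 9, 0)
tickets = [
    Ticket(start, start + timedelta(seconds=60), start + timedelta(seconds=600), False),
    Ticket(start, start + timedelta(seconds=120), start + timedelta(seconds=1800), True),
]
summary = summarize(tickets)
print(summary)
```

Tracking the same summary before and after rollout makes it easier to see whether the assistant is actually moving these numbers.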

5. Refresh often

Set a recurring update schedule so your assistant pulls in any new help center content, product changes, or policy shifts.

6. Improve prompting over time

As the assistant handles more queries, look at which ones it fails to answer well. Refine your training materials and prompts to close those gaps.

Conclusion

AI assistants are already part of the daily workflow in thousands of support teams, SaaS companies, and ecommerce operations. When connected to your actual knowledge base and governed with the right guardrails, they reduce workload, improve customer experience, and help teams grow smarter.

What used to require hours of human effort (routing messages, pulling data, responding with consistency) is now handled in seconds by intelligent assistants that learn, adapt, and scale with your business.

Whether you're a growing startup or an established team buried under email, the message is clear: if you’re still doing it all manually, you’re already behind.

With tools like Gmelius AI Assistants, you don’t need to overhaul your stack or build your own solution. You just plug it into Gmail—and let it work.
